12 Tue

TIL

[AI School Cohort 1] Week 6 DAY 2

Linear Regression (Linear Models for Regression)

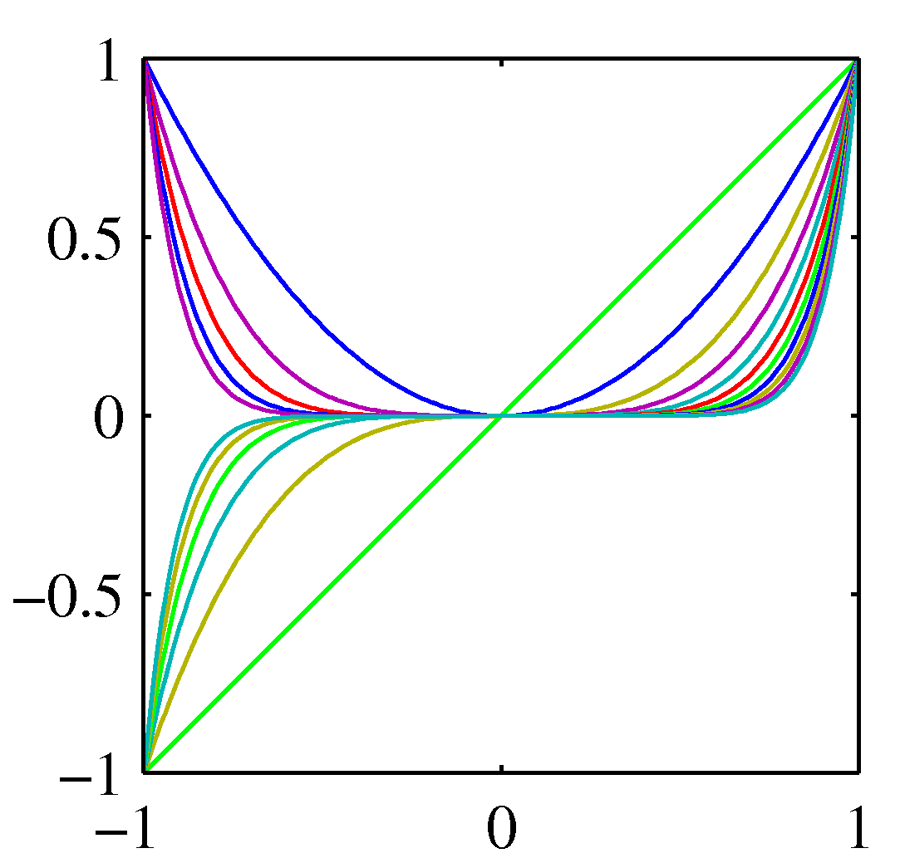

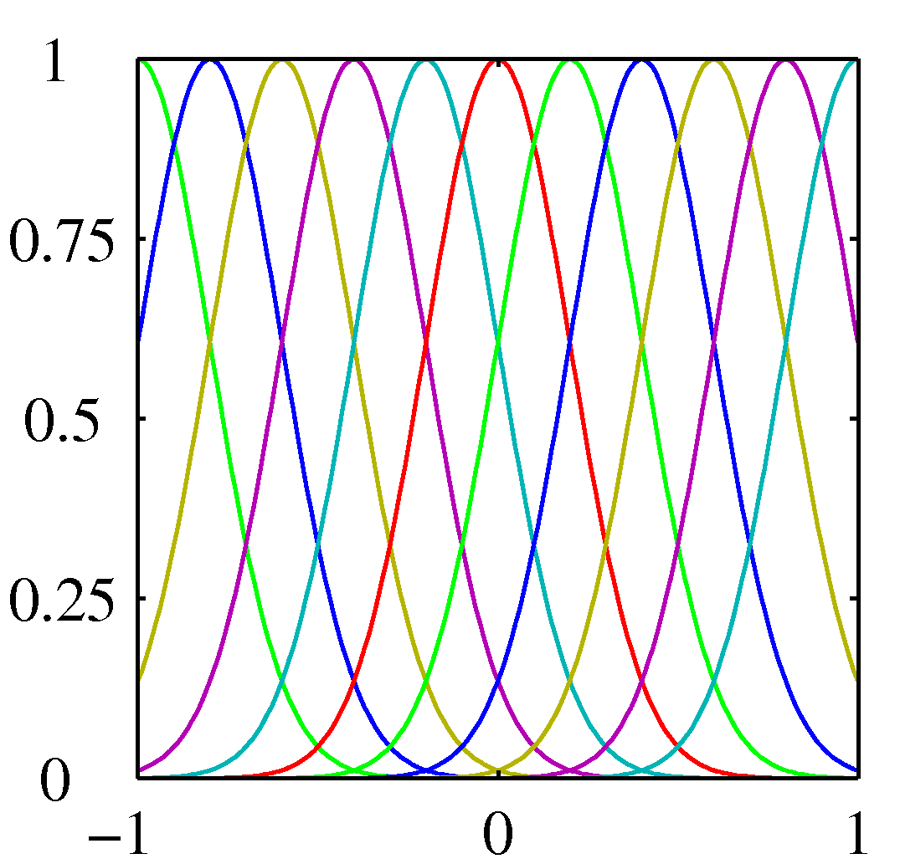

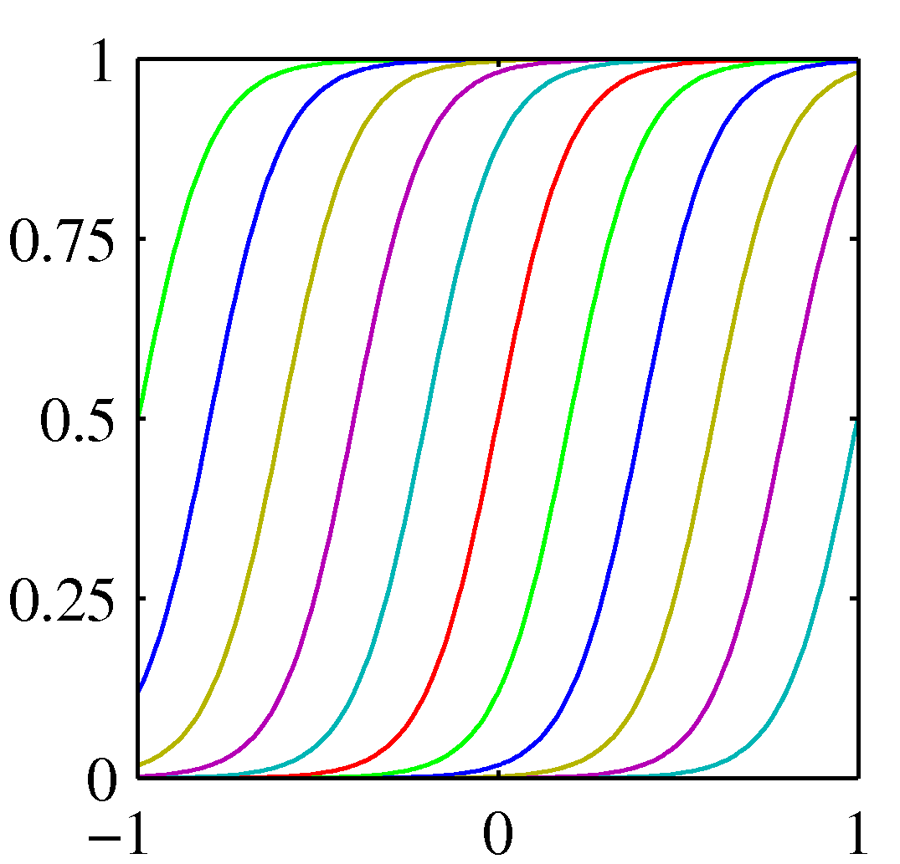

Linear Basis Function Models

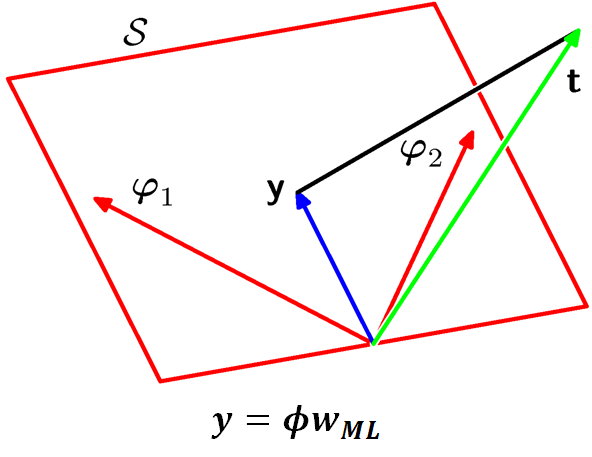

Maximum Likelihood and Least Squares

Finding the optimal w via maximum likelihood estimation
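Assuming Gaussian noise with precision β, maximizing the likelihood over w is equivalent to minimizing the sum-of-squares error. This gives the normal equations $w_{ML} = (\Phi^T\Phi)^{-1}\Phi^T t$ and $1/\beta_{ML} = \frac{1}{N}\sum_n (t_n - w_{ML}^T\phi(x_n))^2$. The pure-Python sketch below assumes a polynomial basis $\phi_j(x) = x^j$ (a hypothetical choice for illustration) and solves the system by Gaussian elimination instead of forming the inverse. `design_matrix`, `solve`, and `fit_ml` are illustrative names.

```python
def design_matrix(xs, degree):
    """Phi[n][j] = phi_j(x_n) = x_n ** j (polynomial basis, assumed for illustration)."""
    return [[x ** j for j in range(degree + 1)] for x in xs]


def solve(a, b):
    """Solve the square system a w = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * w[c] for c in range(r + 1, n))
        w[r] = (m[r][n] - s) / m[r][r]
    return w


def fit_ml(xs, ts, degree):
    """Return w_ML from the normal equations and 1/beta_ML (the mean squared residual)."""
    phi = design_matrix(xs, degree)
    d = degree + 1
    gram = [[sum(row[i] * row[j] for row in phi) for j in range(d)] for i in range(d)]  # Phi^T Phi
    rhs = [sum(row[i] * t for row, t in zip(phi, ts)) for i in range(d)]  # Phi^T t
    w = solve(gram, rhs)
    residuals = [t - sum(wj * pj for wj, pj in zip(w, row)) for row, t in zip(phi, ts)]
    inv_beta = sum(r * r for r in residuals) / len(ts)
    return w, inv_beta


w, inv_beta = fit_ml([0, 1, 2, 3], [1, 3, 5, 7], degree=1)
print(w, inv_beta)  # w is approximately [1.0, 2.0], inv_beta approximately 0
```

Because the toy targets lie exactly on t = 1 + 2x, the residuals vanish and the estimated noise variance $1/\beta_{ML}$ is essentially zero. With noisy targets it would be positive.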

Online Learning (Sequential Learning)

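If the dataset is very large, computing the solution in one shot is difficult. There are several alternatives, and this is one of them.

Split the training data into small pieces and update the model a little at a time.

However large the dataset, the model can still be trained to a reasonable degree.

It may take longer, but the memory burden is lower.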

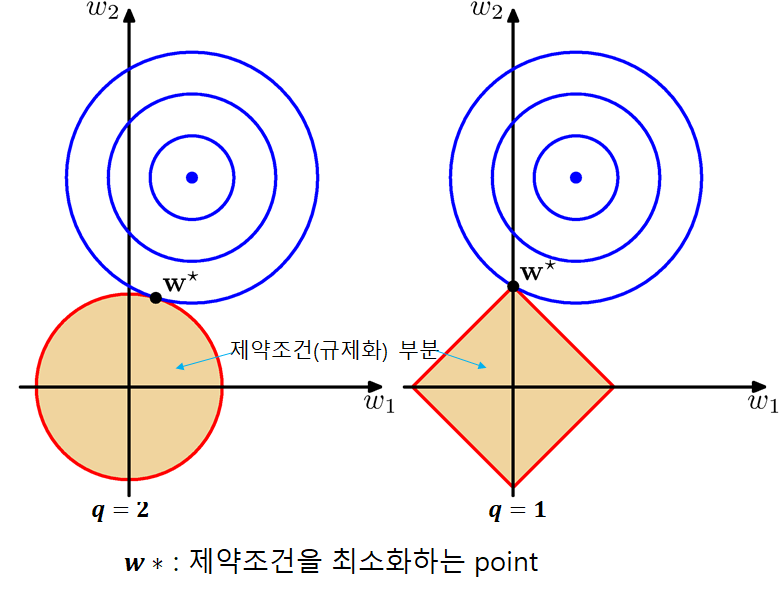
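A common per-point update for this is stochastic gradient descent on the sum-of-squares error, the least-mean-squares (LMS) rule $w^{(\tau+1)} = w^{(\tau)} + \eta\,(t_n - w^{(\tau)T}\phi_n)\,\phi_n$. A minimal sketch, assuming the basis $\phi(x) = (1, x)$, a fixed learning rate η, and a hypothetical `make_stream` callable that yields one `(x, t)` pair at a time:

```python
def phi(x):
    """Basis vector (1, x); an assumed choice for illustration."""
    return [1.0, x]


def lms_step(w, x, t, eta):
    """One LMS update: w <- w + eta * (t - w^T phi(x)) * phi(x)."""
    f = phi(x)
    error = t - sum(wi * fi for wi, fi in zip(w, f))
    return [wi + eta * error * fi for wi, fi in zip(w, f)]


def sequential_fit(make_stream, eta=0.05, epochs=500):
    """make_stream() yields (x, t) pairs one at a time (e.g. read from disk),
    so only w and the current pair are held in memory."""
    w = [0.0, 0.0]
    for _ in range(epochs):
        for x, t in make_stream():
            w = lms_step(w, x, t, eta)
    return w


def toy_stream():
    """Hypothetical data source: noise-free points on t = 1 + 2x."""
    for x in [0.0, 1.0, 2.0, 3.0]:
        yield x, 1.0 + 2.0 * x


print(sequential_fit(toy_stream))  # close to [1.0, 2.0]
```

The learning rate must be small enough for the updates to stay stable. The value 0.05 was picked for this toy data only.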

Regularized Least Squares
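Adding a quadratic penalty $\frac{\lambda}{2} w^T w$ to the sum-of-squares error keeps a closed-form solution, $w = (\lambda I + \Phi^T\Phi)^{-1}\Phi^T t$. The sketch below assumes the two-element basis $\phi(x) = (1, x)$ so the 2×2 system can be inverted by hand. It also penalizes the bias weight, which some treatments leave out. `ridge_fit` is an illustrative name.

```python
def ridge_fit(xs, ts, lam):
    """Regularized least squares for basis phi(x) = (1, x) (assumed):
    w = (lam * I + Phi^T Phi)^{-1} Phi^T t, via the closed-form 2x2 inverse."""
    # Entries of the symmetric matrix lam * I + Phi^T Phi = [[a, b], [b, d]]
    a = lam + len(xs)
    b = sum(xs)
    d = lam + sum(x * x for x in xs)
    # Phi^T t
    r0 = sum(ts)
    r1 = sum(x * t for x, t in zip(xs, ts))
    det = a * d - b * b
    return [(d * r0 - b * r1) / det, (a * r1 - b * r0) / det]


xs, ts = [0, 1, 2, 3], [1, 3, 5, 7]
print(ridge_fit(xs, ts, lam=0.0))   # [1.0, 2.0], the plain least-squares fit
print(ridge_fit(xs, ts, lam=10.0))  # shrunk toward zero: about [0.6, 1.27]
```

With `lam=0` this reduces to the unregularized fit. Increasing `lam` shrinks the weights toward zero.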

Bias-Variance Decomposition

A theoretical analysis of model overfitting

/ a frequentist approach...
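For squared loss, the expected loss averaged over training sets D is commonly written as a sum of three terms. Here $h(x) = \mathbb{E}[t \mid x]$ is the optimal prediction and $y(x; D)$ is the model fitted on a particular dataset D. This is the standard form, stated here for reference rather than derived:

$$
\begin{aligned}
\text{expected loss} &= (\text{bias})^2 + \text{variance} + \text{noise} \\
(\text{bias})^2 &= \int \{\mathbb{E}_D[y(x; D)] - h(x)\}^2 \, p(x)\, dx \\
\text{variance} &= \int \mathbb{E}_D\big[\{y(x; D) - \mathbb{E}_D[y(x; D)]\}^2\big] \, p(x)\, dx \\
\text{noise} &= \iint \{h(x) - t\}^2 \, p(x, t)\, dx\, dt
\end{aligned}
$$

Very flexible models tend to have low bias but high variance (overfitting), while heavily regularized models tend to have the reverse. The noise term does not depend on the model at all.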

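Bayesian Linear Regression

With the frequentist approach above, it is hard to express the model's uncertainty❗ Bayesian linear regression handles uncertainty much more cleanly❗

The Bayesian approach is more general and more powerful than the frequentist one❗

It lets us obtain the distribution of the predicted values (the predictive distribution)❗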
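With a Gaussian prior $p(w) = \mathcal{N}(w \mid 0, \alpha^{-1} I)$ and a known noise precision β, the posterior is $\mathcal{N}(w \mid m_N, S_N)$ with $S_N^{-1} = \alpha I + \beta\Phi^T\Phi$ and $m_N = \beta S_N \Phi^T t$. The predictive distribution at a new x then has mean $m_N^T\phi(x)$ and variance $1/\beta + \phi(x)^T S_N \phi(x)$. The sketch below assumes the basis $\phi(x) = (1, x)$ and fixed, hand-chosen α and β. In practice these are often estimated, e.g. by evidence maximization.

```python
def bayes_linreg(xs, ts, alpha, beta):
    """Posterior N(w | m_N, S_N) for basis phi(x) = (1, x) (assumed),
    prior N(w | 0, alpha^{-1} I), and known noise precision beta."""
    # S_N^{-1} = alpha * I + beta * Phi^T Phi = [[a, b], [b, d]]
    a = alpha + beta * len(xs)
    b = beta * sum(xs)
    d = alpha + beta * sum(x * x for x in xs)
    det = a * d - b * b
    s = [[d / det, -b / det], [-b / det, a / det]]  # S_N, the inverse of the matrix above
    # m_N = beta * S_N Phi^T t
    r = [beta * sum(ts), beta * sum(x * t for x, t in zip(xs, ts))]
    m = [s[0][0] * r[0] + s[0][1] * r[1], s[1][0] * r[0] + s[1][1] * r[1]]
    return m, s


def predictive(x, m, s, beta):
    """Predictive mean m_N^T phi(x) and variance 1/beta + phi(x)^T S_N phi(x)."""
    f = [1.0, x]
    mean = m[0] * f[0] + m[1] * f[1]
    var = 1.0 / beta + sum(f[i] * s[i][j] * f[j] for i in range(2) for j in range(2))
    return mean, var


m, s = bayes_linreg([0, 1, 2, 3], [1, 3, 5, 7], alpha=1e-6, beta=100.0)
print(predictive(1.5, m, s, 100.0))   # inside the data: small variance
print(predictive(10.0, m, s, 100.0))  # far from the data: larger variance
```

The second variance term grows as x moves away from the training inputs. A single point estimate of w does not carry this uncertainty information.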

[Statistics 110]

Present Part [2 / 34]

Lecture 2: Story Proofs, Axioms of Probability

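Number of ways to split 10 people into two teams of 4 and 6

This equals the number of ways to choose 4 of the 10 people, $\binom{10}{4}$, because once the 4 are chosen, the remaining 6 are determined. It also equals the number of ways to choose 6 of the 10, so without any algebra we can see conceptually that $\binom{10}{4} = \binom{10}{6}$.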
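A brute-force check of this story, enumerating every 4-person team with `itertools.combinations` and pairing it with the 6 people left over:

```python
from itertools import combinations
from math import comb

people = frozenset(range(10))
# Each 4-person team determines the 6-person team as its complement.
splits = {(frozenset(team), people - frozenset(team)) for team in combinations(people, 4)}
print(len(splits), comb(10, 4), comb(10, 6))  # 210 210 210
```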

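Number of ways to split 10 people into two teams of 5 and 5

This starts from the number of ways to choose 5 of the 10, $\binom{10}{5}$, but we must divide by 2. Choosing a particular 5 people for one team and choosing the other 5 for that team produce the same split, so every split is counted twice. Dividing by 2 removes the double counting, giving $\binom{10}{5}/2 = 126$.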
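The same brute-force idea shows the double counting. If each split is stored as an unordered pair of teams, the 252 choices of a 5-person team collapse into half as many distinct splits:

```python
from itertools import combinations
from math import comb

people = frozenset(range(10))
# Store each split as an unordered pair of teams, so choosing team A and choosing
# its complement B give the same element of the set.
splits = {frozenset([frozenset(team), people - frozenset(team)])
          for team in combinations(people, 5)}
print(comb(10, 5), len(splits))  # 252 126
```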

As this shows, always examine the setup carefully and check whether any cases are being counted more than once.

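Sampling with replacement, order does not matter: $\binom{n+k-1}{k}$

To check that this count is right, consider a few special cases.

k = 0: $\binom{n-1}{0} = 1$

There is exactly one way to choose nothing, consistent with 0! = 1.

k = 1: $\binom{n}{1} = n$

There are n ways to choose 1 of n items.

n = 2: $\binom{k+1}{k} = \binom{k+1}{1} = k+1$, since with only two types a selection is determined by how many of the k picks are of the first type (0, 1, ..., k).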

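This is equivalent to counting the ways to put k indistinguishable particles into n distinguishable boxes:

$\binom{n+k-1}{k}$

Example with n = 4, k = 6:

3 / 0 / 2 / 1

ooo||oo|o => k dots (o) and n-1 separators (|)

This is the same as choosing positions for the k dots among the n+k-1 slots.

Once the dots are placed, the positions of the separators are determined automatically.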
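The formula can be checked by brute force with `itertools.combinations_with_replacement`, which enumerates exactly the unordered selections with replacement. `count_multisets` and `stars_and_bars` are illustrative helper names:

```python
from itertools import combinations_with_replacement
from math import comb


def count_multisets(n, k):
    """Brute force: unordered selections of k items from n types, with replacement."""
    return sum(1 for _ in combinations_with_replacement(range(n), k))


def stars_and_bars(counts):
    """Draw box counts as dots and separators, e.g. (3, 0, 2, 1) -> 'ooo||oo|o'."""
    return "|".join("o" * c for c in counts)


for n, k in [(4, 6), (3, 0), (5, 1), (2, 7)]:
    assert count_multisets(n, k) == comb(n + k - 1, k)

print(count_multisets(4, 6), stars_and_bars((3, 0, 2, 1)))  # 84 ooo||oo|o
```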

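n = 2 => the coin-flipping case

Flipping a fair coin twice gives 4 equally likely outcomes: HH, HT, TH, TT.

If the two flips are treated as indistinguishable, there are only 3 outcomes (two heads, two tails, one of each), matching $\binom{2+2-1}{2} = 3$. These 3 outcomes are not equally likely (1/4, 1/4, 1/2), so the naive definition of probability does not apply to them.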
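Enumerating the two flips makes the distinction concrete. The 4 ordered outcomes are equally likely, but after forgetting the order, the 3 remaining outcomes are not:

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Two fair flips: 4 equally likely ordered outcomes.
ordered = list(product("HT", repeat=2))
# Forget the order by sorting each outcome; HT and TH merge into one outcome.
unordered = Counter(tuple(sorted(o)) for o in ordered)
probs = {o: Fraction(c, len(ordered)) for o, c in unordered.items()}
print(len(ordered), len(unordered))  # 4 3
print(probs[("H", "T")], probs[("H", "H")])  # 1/2 1/4
```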

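Story Proof

A proof by interpretation.

e.g. the example from the beginning: the number of ways to choose 4 of 10 = the number of ways to choose 6 of 10

This was shown without comparing factorials.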

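$n\binom{n-1}{k-1} = k\binom{n}{k}$

Choose k of n people to form a club, then choose 1 of those k as its president: $k\binom{n}{k}$ ways.

But the same count can be described differently:

Choose the president from the n people first, then choose the other k-1 members from the remaining n-1: $n\binom{n-1}{k-1}$ ways.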
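Both stories can be counted directly for small n and k and compared against the two closed forms. The helper names are hypothetical:

```python
from itertools import combinations
from math import comb


def club_first(n, k):
    """Pick k of n people for the club, then 1 of those k as president."""
    return sum(1 for club in combinations(range(n), k) for _president in club)


def president_first(n, k):
    """Pick the president from n people, then k - 1 more members from the other n - 1."""
    return sum(
        1
        for p in range(n)
        for _rest in combinations([q for q in range(n) if q != p], k - 1)
    )


for n in range(1, 8):
    for k in range(1, n + 1):
        assert club_first(n, k) == k * comb(n, k)
        assert president_first(n, k) == n * comb(n - 1, k - 1)
        assert club_first(n, k) == president_first(n, k)
```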

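$\binom{m+n}{k} = \sum_{j=0}^{k} \binom{m}{j}\binom{n}{k-j}$: Vandermonde's identity

Proving this algebraically would require factorials or the binomial theorem, but a story proof is much shorter.

Left side: choose k people out of m+n.

Right side: split the m+n people into a group of m and a group of n, then choose k in total, j from the m group and k-j from the n group. (If 0 are chosen from the m group, all k come from the n group; if 1 comes from the m group, k-1 come from the n group; and so on.) Summing over j counts every selection exactly once.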
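A brute-force sketch of the right-hand story: choose k of the m + n people, record how many came from the first group, and compare each tally with $\binom{m}{j}\binom{n}{k-j}$. It relies on `math.comb` returning 0 when the lower index exceeds the upper one.

```python
from itertools import combinations
from math import comb


def vandermonde_rhs(m, n, k):
    """Sum over j of C(m, j) * C(n, k - j); math.comb returns 0 when j > m or k - j > n."""
    return sum(comb(m, j) * comb(n, k - j) for j in range(k + 1))


def tally_by_group(m, n, k):
    """Brute force: choose k of the m + n people (0..m-1 form the m group) and
    count the selections by how many, j, came from the m group."""
    counts = [0] * (k + 1)
    for chosen in combinations(range(m + n), k):
        j = sum(1 for person in chosen if person < m)
        counts[j] += 1
    return counts


for m in range(5):
    for n in range(5):
        for k in range(m + n + 1):
            counts = tally_by_group(m, n, k)
            assert counts == [comb(m, j) * comb(n, k - j) for j in range(k + 1)]
            assert sum(counts) == vandermonde_rhs(m, n, k) == comb(m + n, k)
```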

Probability

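So far, we have solved problems assuming that every outcome is equally likely and that the number of outcomes is finite, and we defined probability on that basis.

The general (non-naive) definition of probability is as follows.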

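A probability space has two components, S and P.

S is the sample space: the set of all possible outcomes of an experiment.

P is a function, though not one like f(x) = x + 3: it takes an event as its input. Events are subsets of S, so the domain of P is the collection of subsets of S.

For an event A ⊆ S, P(A) is a number between 0 and 1. P must satisfy the following two axioms.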

P(∅) = 0, P(S) = 1

Here S is the set of all possible outcomes, and ∅ is the impossible event, which can never occur.

$P\left(\bigcup_{n=1}^{\infty} A_n\right) = \sum_{n=1}^{\infty} P(A_n)$ if $A_n$ is disjoint with $A_m$ for all $m \neq n$

All other definitions and rules of probability are derived from these two axioms.
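For a finite sample space the axioms can be checked exhaustively. The sketch assumes a fair six-sided die as the example S with equal weights (a hypothetical choice). On a finite S only finitely many disjoint events can be nonempty, so checking additivity for pairs of disjoint events is enough there.

```python
from fractions import Fraction
from itertools import chain, combinations

# Example sample space: one roll of a fair six-sided die (an assumed example).
S = frozenset(range(1, 7))
weight = {s: Fraction(1, 6) for s in S}


def P(event):
    """Map an event (a subset of S) to its probability."""
    if not event <= S:
        raise ValueError("an event must be a subset of S")
    return sum((weight[s] for s in event), Fraction(0))


def all_events(space):
    """Every subset of the sample space: the possible inputs to P."""
    items = sorted(space)
    return [
        frozenset(c)
        for c in chain.from_iterable(combinations(items, r) for r in range(len(items) + 1))
    ]


events = all_events(S)
assert all(0 <= P(A) <= 1 for A in events)
# Axiom 1: P(empty set) = 0 and P(S) = 1.
assert P(frozenset()) == 0 and P(S) == 1
# Axiom 2, finite case: P(A or B) = P(A) + P(B) whenever A and B are disjoint.
for A in events:
    for B in events:
        if not A & B:
            assert P(A | B) == P(A) + P(B)

print(len(events), P(frozenset({2, 4, 6})))  # 64 1/2
```

Changing `weight` to any nonnegative values that sum to 1 still passes these checks. This is how the axioms allow outcomes that are not equally likely.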
